Pacing Technique (Part 2)

Intermediate - This article is part of a series.

In this entry, we demonstrate a practical application of the technique presented in the previous blog post.

Note: Pacing is also known as arrival rhythm. This article uses the term pacing throughout.

To clarify a concept from the previous blog post: pacing applies to each component of the scenario individually. For example, if a session (flow) is composed of 10 transactions (HTTP requests), each one will be executed 10 times, regardless of the response time of each individual transaction.

The Requirement #

Suppose this time the client requires a workload composed of two scenarios, each of which is made up of a certain number of steps (requests). The pacing requirement looks like this:

| Scenario | Pace | Percentage |
|---|---|---|
| One | 240 visits per minute | 66% |
| Two | 120 visits per minute | 33% |
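To make the targets concrete, the table can be converted into per-second arrival rates and exact shares. This is only a minimal sketch: the dictionary and variable names are illustrative and are not part of the original test plan.

```python
# Target pacing per scenario, in visits per minute (values from the table above).
targets_per_minute = {"One": 240, "Two": 120}

total_per_minute = sum(targets_per_minute.values())

for scenario, per_minute in targets_per_minute.items():
    per_second = per_minute / 60            # arrivals per second
    share = per_minute / total_per_minute   # fraction of all visits
    print(f"Scenario {scenario}: {per_second:.1f} visits/s, {share:.1%} of visits")
```

The exact shares are two-thirds and one-third, which the table rounds down to 66% and 33%.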

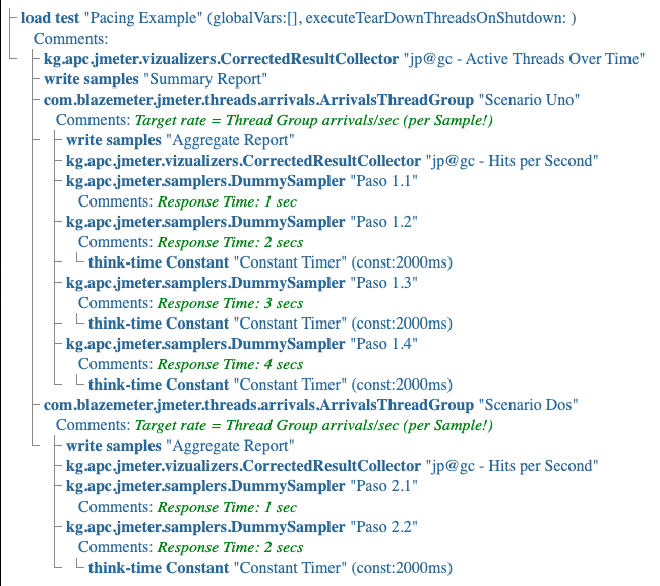

The following diagram illustrates the model with two thread groups, which correspond to each of the scenarios:

Each scenario is configured as we demonstrated in our previous blog post.

Result and Analysis #

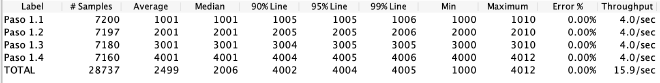

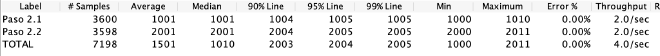

At the end of the test, the Aggregate Report Listener displays the following results for each scenario respectively:

We verified that the pacing goal has been met using the same method described in the previous blog post.

Number of Active Users #

The total number of users is estimated using the same methodology mentioned in our previous blog post:

Number of active users = active users in process + active users on pause (think time)

Number of active users = (average throughput × average response time) + (average throughput × average think time)

Scenario One:

Number of active users = 15.9 × 2.5 + 15.9 × 1.5 ≈ 64

Scenario Two:

Number of active users = 4.0 × 1.5 + 4.0 × 1.0 ≈ 10

Note: The 74 active users are distributed approximately 86% and 14% between Scenario One and Scenario Two, respectively.
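The estimate above can be reproduced with a few lines of Python. This is a minimal sketch: the function name `active_users` is hypothetical, and the units (throughput per second, times in seconds) are an assumption, since the figures above are quoted without units.

```python
def active_users(throughput, response_time, think_time):
    """Estimate concurrent users: those in process plus those on pause."""
    in_process = throughput * response_time
    on_pause = throughput * think_time
    return in_process + on_pause

# Averages quoted above. Assumed units: throughput per second, times in seconds.
scenarios = {
    "One": active_users(15.9, 2.5, 1.5),
    "Two": active_users(4.0, 1.5, 1.0),
}

total = sum(scenarios.values())
for name, users in scenarios.items():
    print(f"Scenario {name}: {users:.1f} users ({users / total:.0%} of total)")
print(f"Total: {total:.1f} users")
```

Rounding each scenario to whole users gives the 64 and 10 shown above, for a total of 74.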

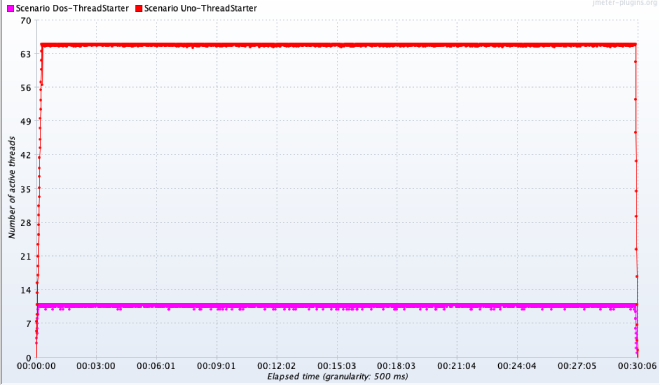

Graphically, the number of active users is as follows:

It is important to highlight a related observation regarding the total number of active users.

In the requirements table, the visits are distributed 66% to Scenario One and 33% to Scenario Two. It would seem intuitive to configure the virtual users (VUsers) according to this same distribution.

Unfortunately, the resulting pacing would be incorrect! Splitting the 74 active users two-thirds to one-third gives approximately 49 and 25 users, respectively. This distribution of users does not meet the pacing requirement established by the client. As an exercise, I invite you to check that this is the case.
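One possible way to approach this exercise is sketched below. It assumes a closed model in which every virtual user loops continuously and each iteration takes the average response time plus the average think time reported earlier. That is a simplification, because response times usually change with load. The variable names are illustrative, and the units (seconds, requests per second) are assumed.

```python
TOTAL_USERS = 74

# Per scenario: (share of visits, avg response time, avg think time, measured throughput).
# Shares come from the requirement (240 and 120 of 360 visits per minute);
# times and throughput are the averages used in the active-user estimate.
scenarios = {
    "One": (240 / 360, 2.5, 1.5, 15.9),
    "Two": (120 / 360, 1.5, 1.0, 4.0),
}

achieved = {}
for name, (share, response, think, measured) in scenarios.items():
    users = share * TOTAL_USERS
    # A looping user completes one iteration every (response + think) seconds.
    achieved[name] = users / (response + think)
    print(f"Scenario {name}: {users:.1f} users -> "
          f"{achieved[name]:.1f}/s (measured at target pacing: {measured}/s)")
```

Under these assumptions, Scenario One falls short of the throughput measured at the target pacing, while Scenario Two overshoots it.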

Conclusions #

In this article, we confirmed that the Arrivals Thread Group is intuitive and relatively easy to configure, even for complex workloads. Additionally, this post shows that configuring users without clearly understanding the workload characteristics can lead to incorrect results.